AutoML: Hyperparameter tuning with NNI and Keras

An introduction to Neural Network Intelligence

As part of my master's thesis at Fraunhofer, I work with several AutoML frameworks. There are already some articles about recent frameworks, but there is nothing about NNI (Neural Network Intelligence) from Microsoft. Because I find this tool useful and use it in my daily work, I will give a basic introduction to NNI. In this article, I focus on hyperparameter optimization, a highly relevant topic for many developers and beginners. Thanks to libraries like TensorFlow or Keras, we can design, train and evaluate neural networks quickly and easily. Despite the simplicity of this process chain, one key question remains:

Which hyperparameters should be selected for maximum model performance?

Even companies like Waymo and DeepMind work on hyperparameter optimization and develop state-of-the-art algorithms for it. Searching for the optimal hyperparameters is a tedious process that requires a lot of time and computing power. AutoML (Automated Machine Learning) is a research field that aims to automate such steps of the machine learning workflow. Besides proprietary AutoML services like Google Cloud AutoML or Amazon SageMaker, open-source solutions such as NNI are available on GitHub. NNI is developed by Microsoft and offers algorithms (Grid Search, Random Search, Evolution, Hyperband, BOHB, TPE, ENAS, etc.) for Neural Architecture Search (NAS) and Hyperparameter Optimization (HPO). Here we focus on HPO. A major advantage of NNI over other current AutoML frameworks is that it supports many state-of-the-art algorithms and requires only minimal changes to existing Python code. For simplicity, this article does not go into the details of the individual search algorithms.
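To make "searching for hyperparameters" concrete, here is a minimal sketch of the simplest strategy NNI offers, random search, written in plain Python without NNI. The search space mirrors the one used later in this article, but the objective function is a hypothetical stand-in: in a real experiment, each evaluation would train and validate a network, which is exactly what makes the search so expensive.

```python
import random

# Hypothetical search space, mirroring the two hyperparameters tuned later in this article.
SEARCH_SPACE = {
    "optimizer": ["Adam", "SGD"],
    "learning_rate": [0.0001, 0.001, 0.002, 0.005, 0.01],
}


def toy_objective(params):
    """Hypothetical stand-in for 'train the model and return validation accuracy'.

    It simply rewards Adam and a learning rate close to 0.001.
    """
    base = 0.9 if params["optimizer"] == "Adam" else 0.85
    return base - abs(params["learning_rate"] - 0.001)


def random_search(space, objective, n_trials, seed=0):
    """Plain random search: sample n_trials configurations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        # Draw one value per hyperparameter, like a "choice" entry in an NNI search space.
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    best, score = random_search(SEARCH_SPACE, toy_objective, n_trials=10)
    print(f"best configuration: {best}, score: {score:.4f}")
```

Tuners such as TPE replace the blind `rng.choice` with a model that proposes promising configurations based on the results of earlier trials.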

NNI can be installed on macOS, Windows and Linux, ideally in a virtual environment. The tool (here we install the developer version on Ubuntu) is available from the GitHub page and can be installed as follows:

```shell
git clone https://github.com/Microsoft/nni.git
python3.6 -m venv venv_nni_dev
source venv_nni_dev/bin/activate
cd nni
pip install tensorflow-gpu
pip install keras
make install-dependencies
make build
make dev-install
cd ..
```

NNI can be controlled from the console and monitored via a web interface. You can run NNI locally or remotely. In local mode, the trials run on the current machine, whereas in remote mode they usually run on a server with fast GPUs. Supported frameworks include PyTorch, Keras, TensorFlow, MXNet, Caffe2, Theano and others. Three files are required:

- Python file with the neural network

- Search space file

- Config file

The neural network is defined in a Python file. NNI later calls this file with different parameter combinations from our search space, so the Python file reads the passed-in parameters and uses them accordingly. One function builds the neural network; other functions prepare the data. The settings for the experiment are stored in the config file, for example:

- Training platform (local or remote)

- Tuner (search algorithm)

- Parameters for the search algorithm

- Path to Python file

- Path to search space file

- Maximum number of trials

- Maximum execution time

- CPU or GPU
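To make the hand-over of parameters between NNI and the Python file more concrete, here is a minimal skeleton of such a trial script. It uses `nni.get_next_parameter()` to receive one configuration from the tuner and `nni.report_final_result()` to send the metric back; both belong to NNI's trial API. The default values, the `train()` placeholder and the fallback for running without NNI installed are assumptions made for this sketch, not the code of the official example.

```python
import logging

try:
    import nni  # NNI's trial API; available when the script is launched by an NNI experiment
except ImportError:  # assumption: allow running the sketch as a plain script without NNI
    nni = None

LOG = logging.getLogger("mnist_keras_sketch")


def generate_default_params():
    """Fallback hyperparameters (assumed values) used when no tuner supplies any."""
    return {"optimizer": "Adam", "learning_rate": 0.001}


def merge_params(received):
    """Overlay the tuner's choices on the defaults; `received` may be None or a dict."""
    params = generate_default_params()
    params.update(received or {})
    return params


def train(params):
    """Hypothetical placeholder: build, train and evaluate the Keras model here.

    It returns a dummy accuracy so that the skeleton runs end to end.
    """
    LOG.info("training with %s", params)
    return 0.0


def main():
    # One configuration sampled from search_space.json, e.g. {"optimizer": "SGD", "learning_rate": 0.01}
    received = nni.get_next_parameter() if nni is not None else None
    params = merge_params(received)
    accuracy = train(params)
    if nni is not None:
        nni.report_final_result(accuracy)  # the metric the tuner maximizes (optimize_mode)
    else:
        print(f"final accuracy: {accuracy}")


if __name__ == "__main__":
    main()
```

Merging into defaults keeps the script usable on its own, which is handy for debugging a trial before handing it to NNI.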

In this article, I discuss the simplest example: MNIST with Keras. Here, the search space consists of lists of individual values. Further information on defining search spaces is available in the NNI documentation; for example, entire value ranges can be searched instead of discrete values. The search space is defined below (nni/examples/trials/mnist-keras/search_space.json):

```json
{
  "optimizer": {"_type": "choice", "_value": ["Adam", "SGD"]},
  "learning_rate": {"_type": "choice", "_value": [0.0001, 0.001, 0.002, 0.005, 0.01]}
}
```

In this scenario, exactly one trial runs at a time. The experiment finishes after 10 trials or 1 hour of execution time. TPE is selected as the search algorithm. The trials run on the local computer on the CPU. The config also specifies the search space file and the Python file with the neural network. Depending on the search algorithm, further parameters may be required; they are described in the NNI documentation. The code below shows the config file (nni/examples/trials/mnist-keras/config.yml):

```yaml
authorName: default
experimentName: example_mnist-keras
trialConcurrency: 1
maxExecDuration: 1h
maxTrialNum: 10
#choice: local, remote, pai
trainingServicePlatform: local
searchSpacePath: search_space.json
#choice: true, false
useAnnotation: false
tuner:
  #choice: TPE, Random, Anneal, Evolution, BatchTuner, MetisTuner
  #SMAC (SMAC should be installed through nnictl)
  builtinTunerName: TPE
  classArgs:
    #choice: maximize, minimize
    optimize_mode: maximize
trial:
  command: python3 mnist-keras.py
  codeDir: .
  gpuNum: 0
```

The sampled hyperparameters are used in the neural network as follows (nni/examples/trials/mnist-keras/mnist-keras.py):

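```python
layers = [  # excerpt: imports and remaining layers omitted
    Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=input_shape),
    Conv2D(64, (3, 3), activation='relu'),
]
if hyper_params['optimizer'] == 'Adam':
    optimizer = keras.optimizers.Adam(learning_rate=hyper_params['learning_rate'])
else:  # 'SGD', the other value in the search space
    optimizer = keras.optimizers.SGD(learning_rate=hyper_params['learning_rate'])
```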
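The search space and the config fit together: two optimizers times five learning rates give exactly 2 × 5 = 10 configurations, which matches `maxTrialNum: 10`. Whether every combination is actually visited depends on the tuner. The following plain-Python sketch (no NNI required) parses the search space and enumerates all combinations. The helper `enumerate_choice_space` is hypothetical and only handles `"choice"` entries; other search space types such as ranges cannot be enumerated this way.

```python
import itertools
import json

# Same content as search_space.json above, inlined so the sketch is self-contained.
SEARCH_SPACE_JSON = """
{
  "optimizer": {"_type": "choice", "_value": ["Adam", "SGD"]},
  "learning_rate": {"_type": "choice", "_value": [0.0001, 0.001, 0.002, 0.005, 0.01]}
}
"""


def enumerate_choice_space(space):
    """Yield every configuration of a search space made only of "choice" entries."""
    names = list(space)
    for name in names:
        kind = space[name].get("_type")
        if kind != "choice":
            raise ValueError(f"{name}: only 'choice' entries can be enumerated, got {kind!r}")
    value_lists = [space[name]["_value"] for name in names]
    # Cartesian product: one value per hyperparameter in every combination.
    for combo in itertools.product(*value_lists):
        yield dict(zip(names, combo))


space = json.loads(SEARCH_SPACE_JSON)
configs = list(enumerate_choice_space(space))
print(len(configs))  # prints 10 (2 optimizers x 5 learning rates)
print(configs[0])    # prints {'optimizer': 'Adam', 'learning_rate': 0.0001}
```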

Once these steps are done, the experiment is started with

```shell
nnictl create --config nni/examples/trials/mnist-keras/config.yml
```

NNI uses port 8080 by default. If this port is already occupied, an alternative port can be specified with the `--port=<port>` option. NNI should display the following message:

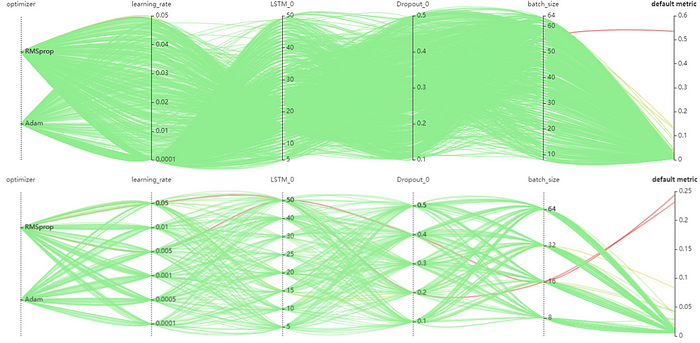
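If port 8080 turns out to be busy, it can help to find a free port before passing it via `--port`. The plain-Python sketch below is an approximate check and not part of NNI; the helper `port_is_free` and the candidate ports are assumptions. It tries to bind a TCP socket to each port: a successful bind means nothing occupied that port at that moment, although another process could still take it afterwards.

```python
import socket


def port_is_free(port, host="127.0.0.1"):
    """Return True if a TCP socket can be bound to (host, port) right now.

    This is only a snapshot: another process may grab the port afterwards.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


if __name__ == "__main__":
    for candidate in (8080, 8081, 8088):
        print(candidate, "free" if port_is_free(candidate) else "in use")
```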

The search process can easily be monitored and controlled via the web interface at the displayed URL. The web interface has two pages. The overview page lists all essential information about the experiment (start time, number of trials, progress, config file, etc.). The second tab gives a detailed view: for example, all trials are displayed with their configuration and the metrics they achieved. The trials are listed in a table and can be exported as JSON files (View -> Experiment Parameters). You can sort the trials by metric, runtime or status. Under `nni/experiments/<experiment_id>/trials/`, NNI creates a folder with log files for each trial. A screenshot during the experiment is shown below:

NNI is still under active development, so I recommend the developer version from the GitHub page. Detailed documentation with many examples can be found on the official GitHub page. I hope this article helped you understand the NNI framework. In future articles, I will cover more features of NNI.

Thank you for reading.

More useful links to NNI on GitHub: